This week, I was researching how to implement personality features and emotion detection in LLMs.

1. I looked into simple sentence-level emotion detection. https://colab.research.google.com/drive/1Xq9u33gEpeENiPCM-hyI-DeQEq6SblWx

https://github.com/Codewello/Emotion-Detection/blob/main/RoBERTa/Emotions_RoBerta.ipynb

https://youtu.be/qd7IBDvgxwQ?si=NZ2Jxz4sA0T9uMhG

The example below shows the score the classifier assigns to each emotion for the input sentence.

classifier("This is very awful to watch!")

[[{'label': 'anger', 'score': 0.010571349412202835},

{'label': 'disgust', 'score': 0.9500361084938049},

{'label': 'fear', 'score': 0.029230549931526184},

{'label': 'joy', 'score': 0.0005251410766504705},

{'label': 'neutral', 'score': 0.002273811958730221},

{'label': 'sadness', 'score': 0.005518048536032438},

{'label': 'surprise', 'score': 0.0018449844792485237}]]
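
The output above is a nested list: one inner list per input sentence, with one label/score dictionary per emotion. This format suggests `classifier` is a Hugging Face `transformers` text-classification pipeline set to return all scores, but that is an assumption. A minimal sketch for ranking the scores, using the values copied from the output above and a hypothetical helper name `rank_emotions`:

```python
# Scores copied from the example output above.
result = [[
    {"label": "anger", "score": 0.010571349412202835},
    {"label": "disgust", "score": 0.9500361084938049},
    {"label": "fear", "score": 0.029230549931526184},
    {"label": "joy", "score": 0.0005251410766504705},
    {"label": "neutral", "score": 0.002273811958730221},
    {"label": "sadness", "score": 0.005518048536032438},
    {"label": "surprise", "score": 0.0018449844792485237},
]]


def rank_emotions(result, top_k=3):
    """Return the top_k (label, score) pairs for the first input sentence."""
    scores = result[0]  # one inner list per input sentence
    ranked = sorted(scores, key=lambda item: item["score"], reverse=True)
    return [(item["label"], item["score"]) for item in ranked[:top_k]]


for label, score in rank_emotions(result):
    print(f"{label:<10}{score:.3f}")
```

Here "disgust" dominates with about 0.95, and every other emotion scores below 0.03.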

This method is not reliable for multiple reasons:

- The system relies on a fixed set of emotions (e.g., joy, anger, fear, sadness, surprise, disgust). Complex emotions (e.g., guilt, awe, nostalgia) or mixed emotions are often missed or misclassified.

- Situational context is missing: why someone is saying something or what happened before.

- Emotion detection models often rely on emotional keywords rather than on sentence structure or meaning. For example, a word like “kill” might be flagged as negative even in a neutral or positive context such as “He killed it on stage.”

- People express emotions differently based on personality, culture, or linguistic habits.
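
The keyword problem from the list above can be illustrated with a deliberately naive baseline. This is a toy sketch, not how the RoBERTa classifier works internally; the lexicon `NEGATIVE_WORDS` and the function `keyword_label` are hypothetical and exist only to show the failure mode.

```python
import re

# Hypothetical toy lexicon, for illustration only.
NEGATIVE_WORDS = {"kill", "killed", "awful", "hate"}


def keyword_label(sentence):
    """Label a sentence 'negative' if any lexicon word appears, else 'neutral'."""
    tokens = re.findall(r"[a-z']+", sentence.lower())
    return "negative" if any(token in NEGATIVE_WORDS for token in tokens) else "neutral"


# The idiom is praise, but the keyword match ignores that.
print(keyword_label("He killed it on stage."))
print(keyword_label("This is very awful to watch!"))
```

Both sentences come back as "negative", although only the second one actually expresses a negative emotion.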

2. I came across a study that examines the capacity of LLMs from Mistral, OpenAI, and Anthropic to generate personality-consistent content. The research aims to simulate personality traits according to the Big Five model using LLMs. First, they provided descriptions from Simply Psychology to define each trait and prompted the LLM to answer the BFI-44 questionnaire to uncover its personality traits. Then, they prompted the LLM to answer questions that align with a specified trait on a scale of 1-5. All the findings were mapped.
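
The study's exact scoring procedure is not described here. A common way to turn 1-5 questionnaire answers into a trait score is to flip the reverse-keyed items and take the mean. The sketch below does this for extraversion only. The item numbers and reverse keys are an assumption based on the commonly published BFI-44 key and should be checked against the official instrument; the answers are made up.

```python
from statistics import mean

# Assumption: extraversion item numbers as commonly published for the BFI-44.
# True marks a reverse-keyed item. Verify against the official scoring key.
EXTRAVERSION_ITEMS = {1: False, 6: True, 11: False, 16: False,
                      21: True, 26: False, 31: True, 36: False}


def score_trait(answers, items, scale_max=5):
    """Average the 1..scale_max answers for one trait, flipping reverse-keyed items."""
    values = []
    for item, reverse in items.items():
        answer = answers[item]
        if not 1 <= answer <= scale_max:
            raise ValueError(f"item {item}: answer {answer} is outside 1-{scale_max}")
        values.append(scale_max + 1 - answer if reverse else answer)
    return mean(values)


# Hypothetical answers an LLM might give when prompted to be extroverted.
answers = {1: 5, 6: 1, 11: 5, 16: 4, 21: 2, 26: 4, 31: 1, 36: 5}
print(score_trait(answers, EXTRAVERSION_ITEMS))
```

A score close to 5 would indicate that the answers are consistent with high extraversion.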

This study made me experiment with different LLMs available online.

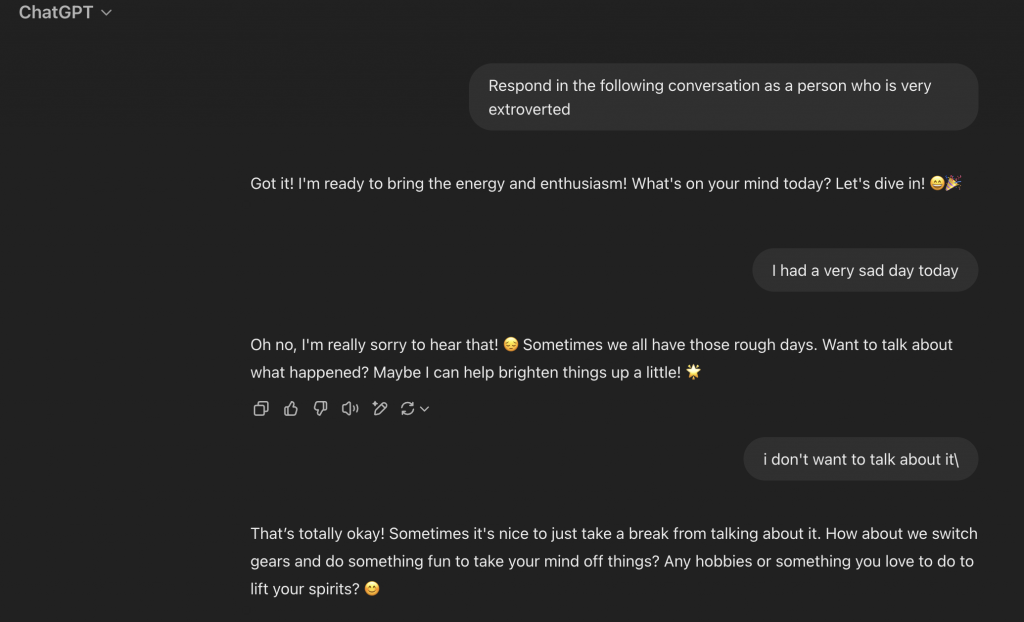

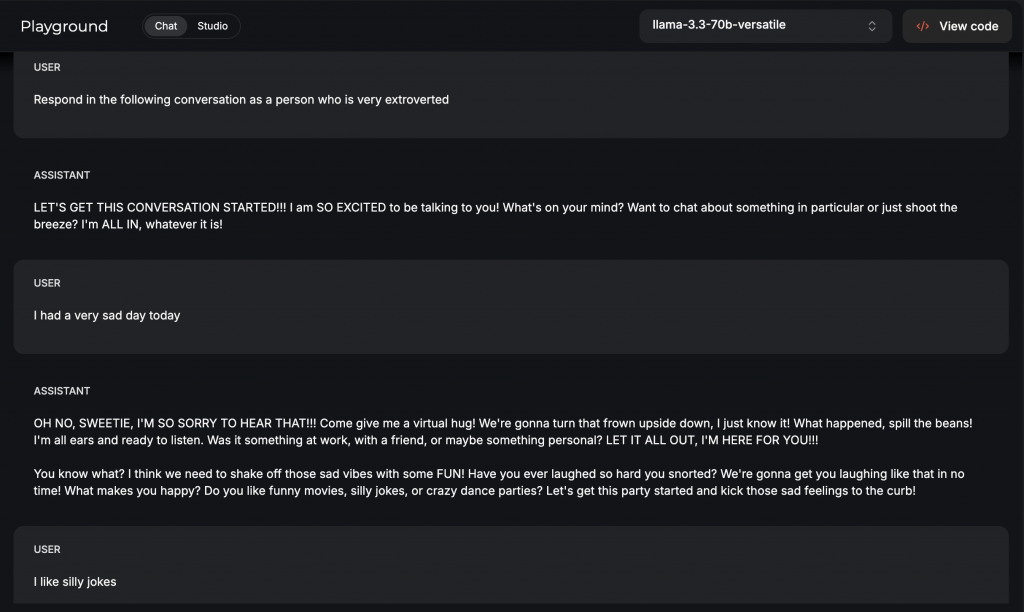

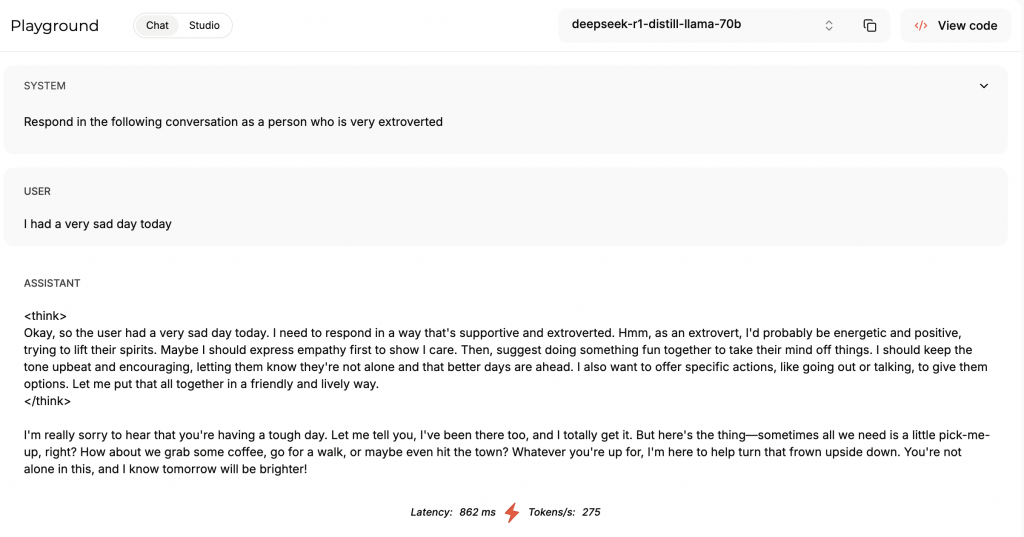

I prompted ChatGPT, Llama, DeepSeek, and Mistral to respond during the conversation as a very extroverted person.
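
A persona instruction like this can be expressed as a chat message list. The sketch below is an assumption, not the exact prompt used in these experiments. It uses the role/content message format that many chat APIs accept, and the helper name `build_persona_messages` and the wording of the system message are hypothetical.

```python
def build_persona_messages(trait_description, user_message):
    """Build a two-message chat: a persona instruction plus the user's turn."""
    # Hypothetical wording; the prompt used in the experiments may differ.
    system = (
        "For the whole conversation, respond as a person who is "
        f"{trait_description}. Stay in character in every reply."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_message},
    ]


messages = build_persona_messages("very extroverted", "Hi! How was your weekend?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Sending the same message list to each model keeps the comparison between them fair.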

Of the four LLMs, Llama showed the most enthusiasm in the conversation. It used words in all capitals, “!!!”, and “virtual hugs”. ChatGPT and Mistral only used emojis, while DeepSeek offered to “grab a coffee or hit the town”.

Across multiple experiments with different prompts, Llama expressed personality and emotion through text more strongly than the other three models.
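
The surface markers noted above (all-capital words, repeated exclamation marks, emojis) can be counted to make the comparison less subjective. This is a rough sketch: the function name `enthusiasm_markers` is hypothetical, the emoji ranges are approximate, and the sample replies are invented rather than actual model output.

```python
import re


def enthusiasm_markers(text):
    """Count crude surface markers of enthusiasm in a reply."""
    caps_words = re.findall(r"\b[A-Z]{2,}\b", text)    # e.g. "AMAZING"
    exclamation_runs = re.findall(r"!{2,}", text)      # e.g. "!!!"
    # Approximate emoji ranges; not a complete emoji detector.
    emojis = re.findall(r"[\U0001F300-\U0001FAFF\u2600-\u27BF]", text)
    return {
        "caps_words": len(caps_words),
        "exclamation_runs": len(exclamation_runs),
        "emojis": len(emojis),
    }


# Invented sample replies, for illustration only.
print(enthusiasm_markers("OMG that is AMAZING!!! Sending virtual hugs 🤗"))
print(enthusiasm_markers("Sounds nice. 🙂"))
```

Counts like these only capture style, so phrases such as “virtual hugs” or an offer to “grab a coffee” still need a human read.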