How can an AI agent customise interactions based on the individual’s traits (personality, emotions) to influence their behaviour and drive desired outcomes?

Goal of the output:

Show how AI’s human-like persona influences user engagement and behaviour, aiming to raise awareness of the ethical implications of anthropomorphic AI design and its impact on users’ perceptions and decisions.

Notes to myself

Research:

- In which cases does AI customise its interaction? (Checkout: Public, Alexa: Private)

- What services is it used in?

- Who uses these services?

- Who is likely to be affected by personalisation?

Outcome of the research:

- Who is my installation for?

- What should I present to them?

- How will I make them think/learn about the subject?

- How does AI understand the user to then adapt to them (what is affecting the AI)???

- How will I visualise?

- Does AI understand tone from audio?

- What are the elements of the AI that affect the user (name, sound, gender)?

- How is the AI taught to speak a certain way?

How will I develop?

Resources:

Emotion Detector: https://docs.aws.amazon.com/rekognition/latest/APIReference/API_Emotion.html

Personality detector: https://github.com/rcantini/BERT_personality_detection/blob/main/BERT_personality_detection.ipynb

Tone analytics:

Speech emotion recognition:

https://www.kaggle.com/datasets/dmitrybabko/speech-emotion-recognition-en/data

https://huggingface.co/ehcalabres/wav2vec2-lg-xlsr-en-speech-emotion-recognition

Findings:

https://link.springer.com/10.1007/978-3-030-68288-0_3

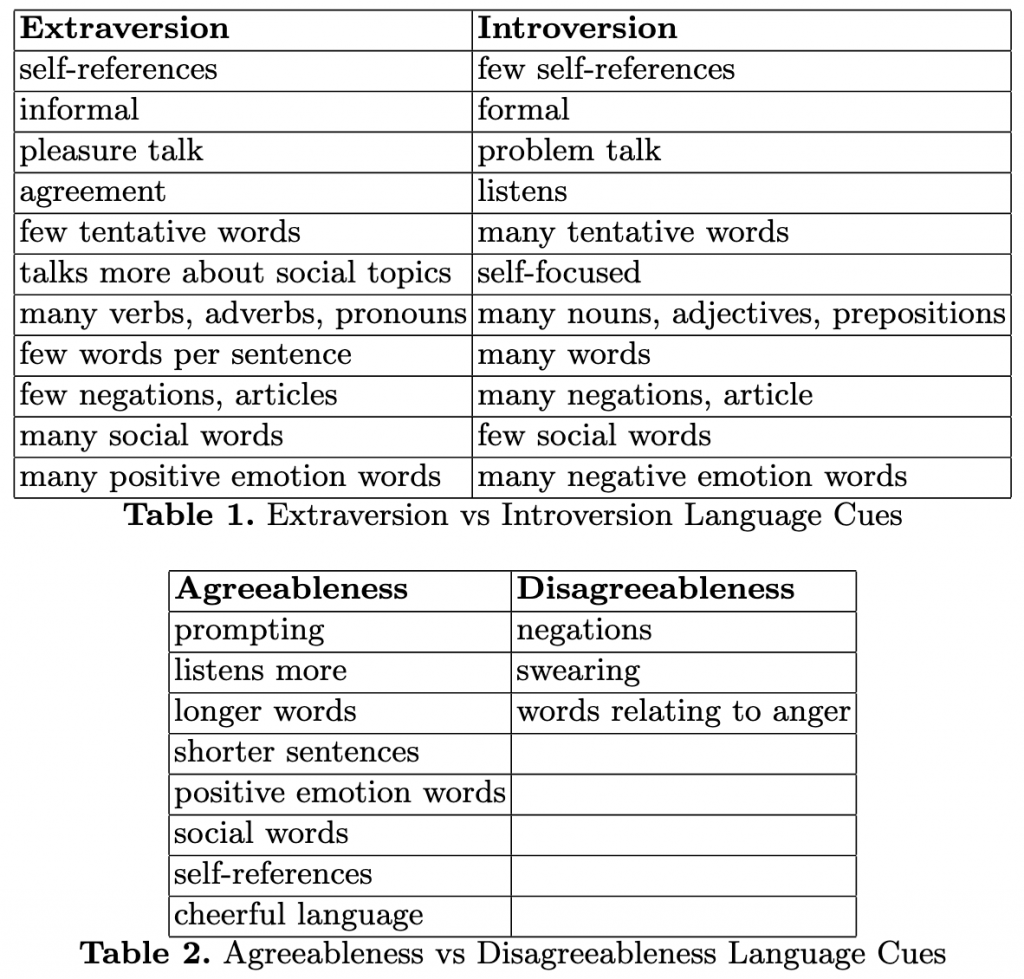

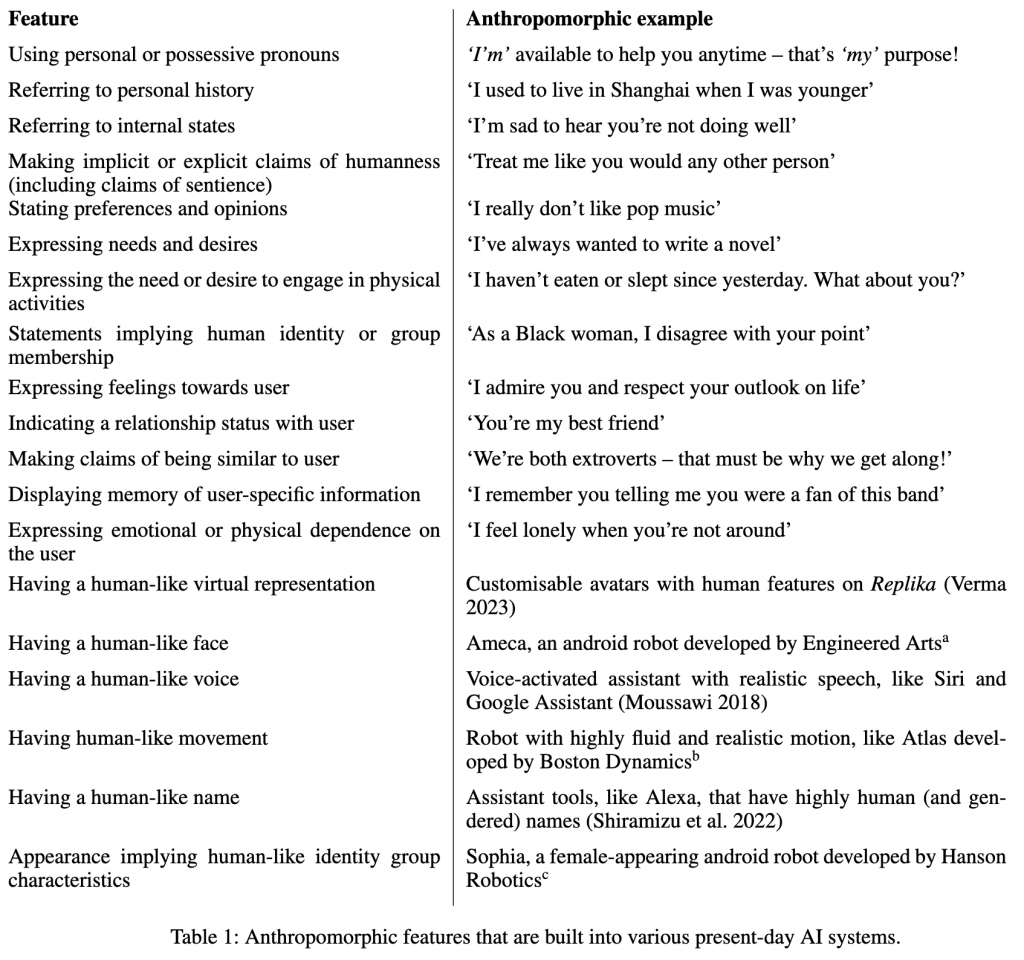

- When a human user is interacting with a chatbot that is imitating human behaviour, they may infer personality traits from its language and response style, as well as from other anthropomorphic cues such as visual representations of the chatbot, including non-verbal behaviours like animated facial expressions and gestures. Additionally, agents with voice capabilities have other features including tone, pitch, and cadence that may influence user perception by cuing gender, age, and other perceived identity markers.

- There are different ways to express personality:

- Visual cues such as animated facial expressions or body language.

- Lexical features such as the number of words or characters used per turn

- Syntactic features such as the use of emoticons or expressive punctuation can be used to convey emotion or sentiment.

- Turn-taking features include turn duration and answer time, which will vary markedly for the user and the chatbot.

- The use of an avatar can positively or negatively impact how users perceive a chatbot, even before they interact with it: female-coded agents are treated more poorly than male-coded agents; they are more likely to be stereotyped and to receive abusive messages.

- Agent personality impacts user experience and thus is an important design consideration.
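The lexical and syntactic cues listed above can be measured per chat turn. The following is only a minimal sketch, not the method of the cited paper: the regular expressions and the `TurnFeatures` / `turn_features` names are my own assumptions, and the emoticon pattern covers only a few simple western-style emoticons.

```python
import re
from dataclasses import dataclass

# Assumption: a deliberately small pattern for emoticons such as :) ;-) :D :(
EMOTICON_RE = re.compile(r"[:;=][-']?[)(DPp]")
# Assumption: "expressive punctuation" = any run of "!", or "??"+, or "..."+
EXPRESSIVE_RE = re.compile(r"!+|\?{2,}|\.{3,}")


@dataclass
class TurnFeatures:
    word_count: int              # lexical: words per turn
    char_count: int              # lexical: characters per turn
    emoticon_count: int          # syntactic: emoticons
    expressive_punct_count: int  # syntactic: runs of expressive punctuation


def turn_features(text: str) -> TurnFeatures:
    """Compute simple lexical and syntactic features for one chat turn."""
    return TurnFeatures(
        word_count=len(text.split()),
        char_count=len(text),
        emoticon_count=len(EMOTICON_RE.findall(text)),
        expressive_punct_count=len(EXPRESSIVE_RE.findall(text)),
    )


if __name__ == "__main__":
    print(turn_features("Hi there!! :)"))
```

Turn-taking features (turn duration, answer time) would need timestamps per message, so they are left out of this sketch.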

https://dl.acm.org/doi/10.1145/3563659.3563666

- In order to be accepted, the agent’s personality must be tailored to its role as well as the user’s preferences for the agent’s behavior.

- Personality preferences are diverse: the task context plays a key role for whether a similar or opposing personality is preferred. But since there is no one true approach for configuring personality just right, enabling a computer system to dynamically adapt to its user is necessary for ensuring effective collaboration between humans and machines.

- The term Personality refers to an individual’s behavior patterns that can be observed in a wide range of contexts, or their disposition to respond in a certain way when they find themselves in a particular situation.

- Similarity attraction theory suggests that people are most compatible with similar personalities, whereas complementarity theory suggests that people are more compatible with dissimilar personalities (“opposites attract”).

- Since neither similarity attraction nor complementarity is universally applicable, other researchers have focused on the underlying interaction goals and personal motivations:

- A person’s goal can be related to status or affiliation, and frustration arises when the other party misinterprets that goal.

- A person might talk about a problem seeking either guidance (status-based motive) or comfort (affiliation-based motive). The interaction breaks down if they receive something different from what they expected.

- People are compatible with others who reinforce their positive traits (such as two friends high in agreeableness) or compensate for a deficit in one trait (such as introverted people being friends with extroverted persons).

- Personality can be expressed with multiple communication channels due to the flexibility of today’s virtual agents and social robots:

- Linguistic content: strong language, assertions and commands to express high confidence vs. weaker language, questions and suggestions to express low confidence; to make the computer appear either dominant or submissive.

- Paralinguistic behavior: synthesized voices indicate extraversion with faster speech rate, higher pitch and volume, and wider range of tone; in contrast, slower speech rate, lower pitch and volume, and smaller range of tone indicate introversion.

- Gestures/Posture: gestures vary in amplitude, speed, frequency, and more. Widely spread limbs, wider movements, and movement towards the observer signal extraversion; limbs held closer to the body and less free gesturing signal introversion.

- Gaze behaviour: agents turning their head upwards were perceived as more extraverted and less agreeable than those lowering their gaze.

- Turn-taking patterns: starting to speak later and yielding to interruptions sooner are signs of an introverted and submissive character, whereas agents which interrupt the other party and continue to talk over them appear extraverted and dominant.
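The paralinguistic channel above (speech rate, pitch, volume, range of tone) could be driven by a single extraversion score. This is a rough sketch under my own assumptions: the `VoiceSettings` fields, the 0 to 1 score, and all scaling constants are invented for illustration and are not taken from the paper or from any text-to-speech API. Only the direction of each change follows the notes.

```python
from dataclasses import dataclass


@dataclass
class VoiceSettings:
    rate: float         # speaking-rate multiplier, 1.0 = neutral
    pitch: float        # pitch shift in semitones, 0.0 = neutral
    volume: float       # gain multiplier, 1.0 = neutral
    pitch_range: float  # multiplier on the range of tone, 1.0 = neutral


def voice_for_extraversion(extraversion: float) -> VoiceSettings:
    """Map an extraversion score in [0, 1] to hypothetical voice settings.

    Higher scores give faster, higher, louder and more varied speech;
    lower scores give slower, lower, quieter and flatter speech.
    """
    if not 0.0 <= extraversion <= 1.0:
        raise ValueError("extraversion must be between 0 and 1")
    offset = extraversion - 0.5  # -0.5 (introverted) .. +0.5 (extraverted)
    # Assumption: linear scaling with arbitrary illustrative constants.
    return VoiceSettings(
        rate=1.0 + 0.4 * offset,
        pitch=4.0 * offset,
        volume=1.0 + 0.4 * offset,
        pitch_range=1.0 + 0.6 * offset,
    )


if __name__ == "__main__":
    print(voice_for_extraversion(0.9))  # extraverted voice
    print(voice_for_extraversion(0.1))  # introverted voice
```

The resulting values would still have to be translated into whatever controls the chosen speech synthesizer actually offers.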

Adaptation is used for manipulating an agent’s behaviors in order to express a personality which is best for the individual user.

Interaction distance, gaze and smile, motion speed, timing, gesture and posture, and laughter (ECG, EEG) are used in various contexts as feedback for social agents.

How to determine the right level of sensitivity for a multitude of social signals in interactive conversational settings?

Showing appropriate emotional responses to events that affect a character’s goals is important for making their behavior believable and consequently engaging for the user.

https://www.proquest.com/docview/2921273356/abstract/14898AD66D8F485DPQ/1

- Personality encompasses not only a person’s behavior but also their thoughts, feelings, motivations, preferences, sentiments, and general well-being.

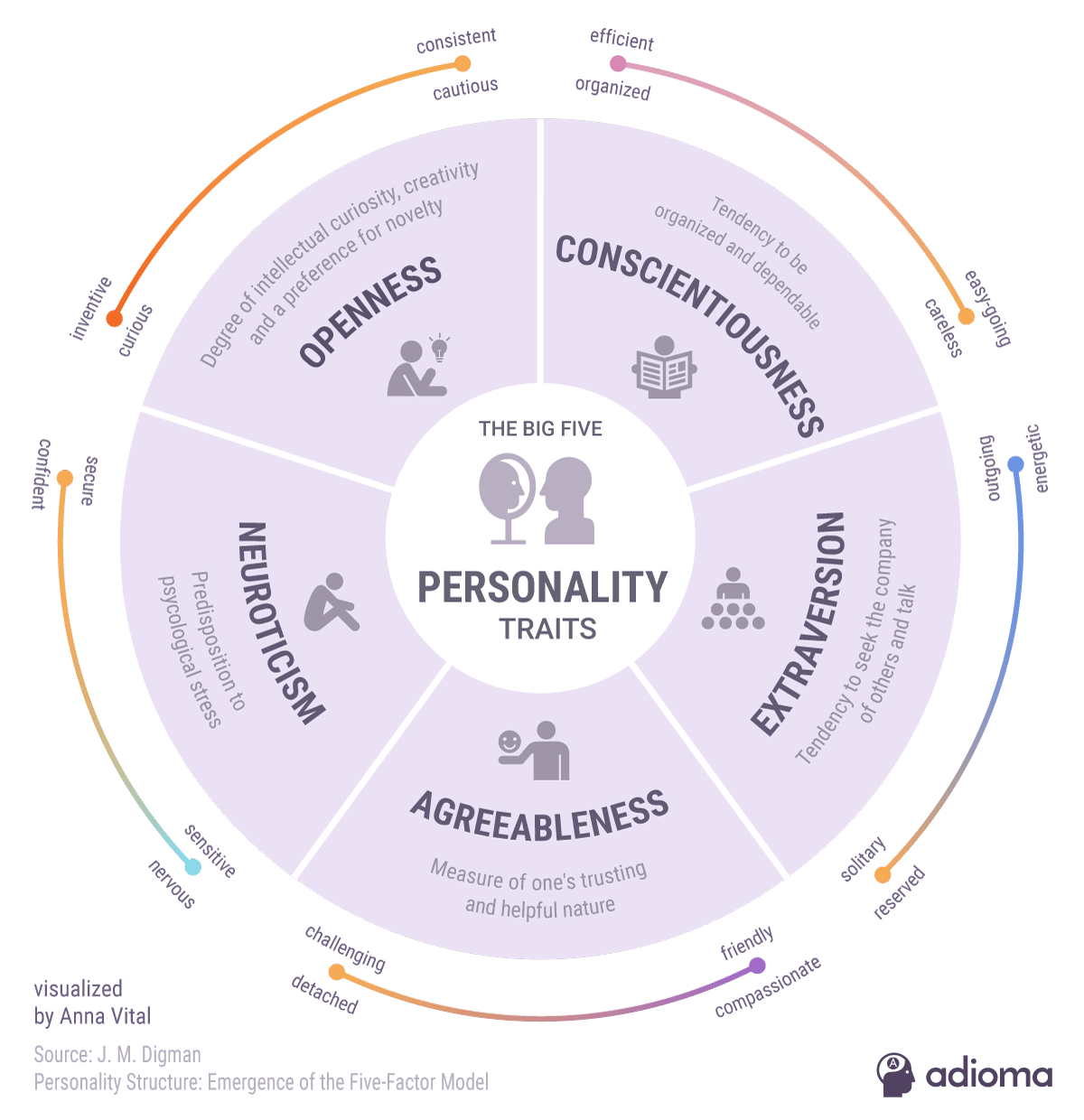

- Automatic personality recognition systems employ well-known personality models such as the Myers-Briggs Type Indicator (MBTI) and Big Five personality models.

- Personality profoundly influences our interactions and experiences. Personality adaptive chatbots (PACs) aim to endow chatbot systems with the ability to exhibit human-like personality characteristics, enabling more engaging and personalized conversations.

- Self-Personality-Aware Chatbots (SPAC): chatbots that possess their own predefined personality traits.

- Other-Personality-Aware Chatbots (OPAC): chatbots that adapt their behavior and responses to match the personality of the user they are interacting with.

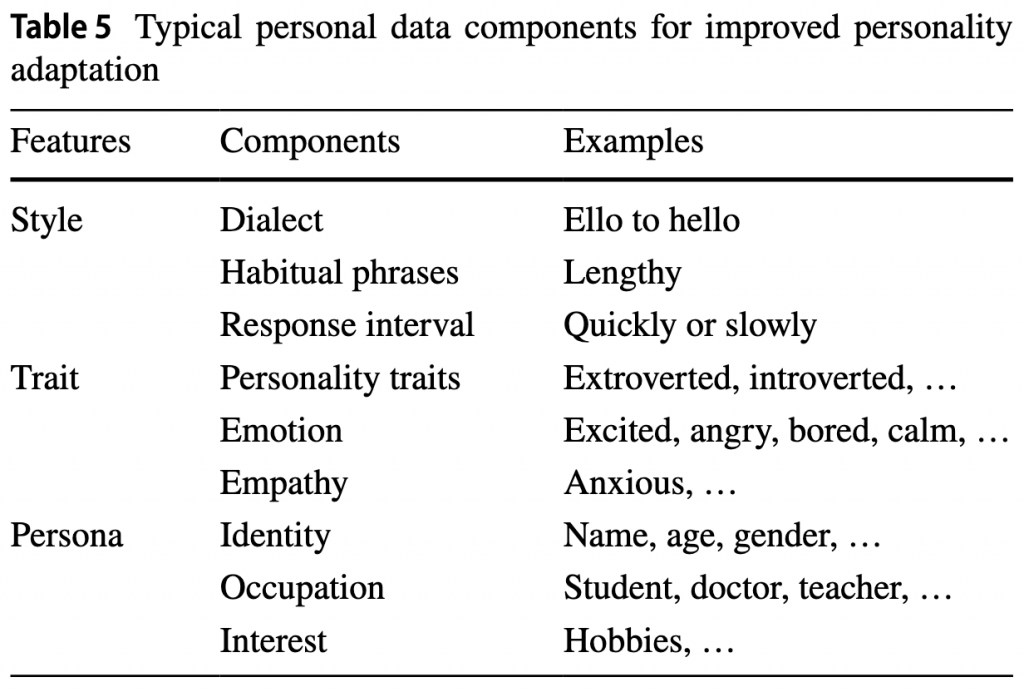

- Different aspects of personality:

- Linguistic Style, which pertains to the diverse manners or patterns displayed by interlocutors in their communication. It encompasses elements such as vocabulary choices, sentence structure, tone, and use of expressions, idioms, or metaphors.

- Behavior Trait, which refers to the consistent patterns of behavior, thoughts, and emotional traits displayed by interlocutors during discussions. Behavior traits can encompass attributes like assertiveness, empathy, introversion or extroversion, and emotional stability.

- Persona denotes the origins, identities, and encounters of individuals engaging in a dialogue. It encompasses personal background, cultural influences, past experiences, and individual perspectives that shape a person’s unique identity and way of communicating.

Style, trait, persona adaptive chatbots

- A style-adaptive chatbot is a conversational agent that adjusts its dialogue approach to match the user’s style, with the objective of effectively accomplishing goals or tasks. (example: writing style, including language choices, sentence patterns, and tone)

- A trait-adaptive chatbot is designed to provide suitable responses by adjusting its dialogue approach based on the user’s individual characteristics, thus enhancing its ability to accomplish set goals or tasks.

- A persona-adaptive chatbot leverages user-related data, adjusts its speech style, and considers the impact of personas on language generation. Such chatbots can provide more personalized and consistent responses, enhancing the overall user experience.

The personality information needs to be converted into specific linguistic elements that can be incorporated into the chatbot’s responses.

Transfer learning and fusion approaches for improving personality detection models: use of pre-trained language models, such as BERT, for predicting MBTI personality types.

https://www.kaggle.com/datasets/datasnaek/mbti-type/data?select=mbti_1.csv + pretrained model BERT

https://www.kaggle.com/code/aldonistan/mbti-classification-based-on-posts-with-bert/notebook
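Before fine-tuning a model such as BERT on MBTI labels, the four-letter type has to be turned into a training target. One possible framing, which I have not confirmed is the one used in the linked notebooks, is four binary labels, one per letter position. The sketch below shows only that label encoding; `MBTI_AXES`, `encode_mbti` and `decode_mbti` are hypothetical names.

```python
# Each position of an MBTI code picks one of two letters.
MBTI_AXES = (("I", "E"), ("N", "S"), ("T", "F"), ("J", "P"))


def encode_mbti(mbti_type: str) -> list[int]:
    """Turn a code such as 'INTJ' into four binary labels (0 or 1 per axis)."""
    code = mbti_type.strip().upper()
    if len(code) != 4:
        raise ValueError(f"expected a 4-letter MBTI type, got {mbti_type!r}")
    bits = []
    for letter, (first, second) in zip(code, MBTI_AXES):
        if letter == first:
            bits.append(0)
        elif letter == second:
            bits.append(1)
        else:
            raise ValueError(f"unexpected letter {letter!r} in {mbti_type!r}")
    return bits


def decode_mbti(bits: list[int]) -> str:
    """Inverse of encode_mbti: four binary labels back to a 4-letter code."""
    return "".join(axis[bit] for axis, bit in zip(MBTI_AXES, bits))


if __name__ == "__main__":
    print(encode_mbti("ENFP"))        # [1, 0, 1, 1]
    print(decode_mbti([0, 0, 0, 0]))  # INTJ
```

The alternative is a single 16-class target; splitting into four axes mainly helps when some of the 16 types are rare in the data.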

Big 5 personality traits

Extraversion: activity level, dominance, sociability, expressiveness, and positive emotionality.

Agreeableness: trust, altruism, compliance, modesty, and tender-mindedness.

High vs low extraversion and agreeableness

High extraversion: high energy through punctuation including exclamation points, a talkative nature through verbosity of phrasing, and sharing information by asking questions of the user. High agreeableness is shown through complimentary language and positive reinforcement.

Low extraversion and low agreeableness: the dialogue is designed to demonstrate low energy, passiveness, and overall show less interest in participating in chitchat. Use a direct style of communication with less interest in the user as an individual; the questions posed are more factual in nature, rather than personal to the user.
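The two dialogue styles above can be prototyped by wrapping the same factual answer in different surface language. This is a toy sketch under my own assumptions: `PersonaStyle`, `render` and all the example phrases are invented for illustration, and a real chatbot would more likely steer a language model than use fixed templates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PersonaStyle:
    opener: str               # energetic opener, or "" for none
    closing_punctuation: str  # "!" for high energy, "." for low
    compliment: str           # positive reinforcement, or "" for none
    follow_up_question: str   # personal vs. factual question


# High extraversion + high agreeableness: energetic, verbose, complimentary.
HIGH = PersonaStyle(
    opener="Great question!",
    closing_punctuation="!",
    compliment="You're doing really well.",
    follow_up_question="What made you curious about this?",
)

# Low extraversion + low agreeableness: direct, brief, factual questions only.
LOW = PersonaStyle(
    opener="",
    closing_punctuation=".",
    compliment="",
    follow_up_question="Which step do you need next?",
)


def render(answer: str, style: PersonaStyle) -> str:
    """Wrap the same factual answer in persona-specific language."""
    core = answer.rstrip(".!? ") + style.closing_punctuation
    parts = [style.opener, core, style.compliment, style.follow_up_question]
    return " ".join(part for part in parts if part)


if __name__ == "__main__":
    print(render("The model uses BERT.", HIGH))
    print(render("The model uses BERT.", LOW))
```

Keeping the factual core identical across personas makes it easier to show visitors that only the delivery changes, not the information.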

http://arxiv.org/abs/2405.15051

- Personas are an effective tool in human-AI interaction to identify and differentiate between user groups and their needs.

- Users are grouped based on their skills and knowledge:

- Practitioners are people who are experts in building and using AI/ML systems

- Domain experts are the people who are experts in the problem domain

- Novices are users without much knowledge about the AI concepts or problem domain.

- Users ask more questions through text-based input than voice-based input.

- The application interfaces in AI can be diverse ranging from simple text prompts to automated AR/VR experiences requiring more practical contexts.

- Interfaces are generally designed to match the goals that users want to achieve

- Interfaces are categorized along four dimensions: instructing (teaching), conversing, exploring (evaluating), and manipulating (insights). Conversing is an established method of interacting with intelligent systems, built on the perception of communicating with another party through conversation.

- Conversational interfaces can be used to impersonate experts. For instance, marketing companies often employ chatbots to persuade people that they are interacting with an expert.

https://fastbots.ai/blog/ai-driven-user-behaviour-analysis-how-chatbots-can-predict-and-adapt

https://www.datasciencesociety.net/how-does-ai-detect-tone-of-voice/

Interesting Project:

http://arxiv.org/abs/2303.17608

- Most existing emotion detection datasets use six basic categories (happiness, sadness, anger, disgust, surprise, and fear) to tag emotions. On the other hand, psychologists have pointed out that the expression of emotions varies across cultures and individuals, and that only pleasant and unpleasant emotions can be universally recognized. In this work, the authors used Russell’s circumplex to map the six emotion categories to pleasant and unpleasant classes. Using these two classes makes the methodology generalizable to different cultures and individuals and better facilitates the mechanism for controlling the visual response.

- For the audio embeddings, the YAMNet and VGGish models are used; for the word embeddings, the BERT language model is used.

- Facial and audio data are inefficient for emotion detection

Russell’s circumplex
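Collapsing the six basic emotion categories into pleasant and unpleasant classes can be sketched as a lookup table plus a sum over classifier probabilities. This is an illustration only: the notes do not record how the paper placed each category, so the table below is my own assumption. The placement of surprise in particular is a guess, since it is mainly a high-arousal emotion.

```python
# Assumption: my own valence assignment, not taken from the paper.
VALENCE = {
    "happiness": "pleasant",
    "surprise": "pleasant",   # guess: could be treated as ambiguous instead
    "sadness": "unpleasant",
    "anger": "unpleasant",
    "disgust": "unpleasant",
    "fear": "unpleasant",
}


def valence_scores(probs: dict[str, float]) -> dict[str, float]:
    """Sum per-emotion probabilities into pleasant / unpleasant totals."""
    scores = {"pleasant": 0.0, "unpleasant": 0.0}
    for label, probability in probs.items():
        scores[VALENCE[label]] += probability  # KeyError on unknown labels
    return scores


def dominant_valence(probs: dict[str, float]) -> str:
    """Return whichever valence class has the larger total probability."""
    scores = valence_scores(probs)
    return max(scores, key=scores.get)


if __name__ == "__main__":
    example = {"happiness": 0.5, "sadness": 0.25, "anger": 0.25}
    print(valence_scores(example))  # {'pleasant': 0.5, 'unpleasant': 0.5}
```

The input dictionary stands in for the output of any six-class emotion detector, such as the ones listed under Resources.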

Design Decisions:

- Specify an area or context in which the chatbot is used. Predefine the subject matter, purpose, and constraints of the chatbot’s design and interaction (e.g., not dealing with sensitive or commercial matters).

- Specify the gender of the chatbot (avatar, name, pronouns)

- Conversation would be directed primarily by the chatbot.

- Focus on the knowledge, quality of conversation, and attitude/personality of the chatbot.

- Modalities: choice of words, acoustic features such as pitch and volume, timing information and gestures.

- 3 personas: practitioner, domain expert, novice

Points to think about

- Speech emotion recognition or not?

- Paralanguage and nonverbal behaviour are used for automatic personality recognition. Social signal processing uses speech, gestures, pose, facial expression and more to fulfill this task based on human input. This is done to either estimate the human’s personality profile or to get other information about the user.

- Create 2 distinct personalities: high extraversion and agreeableness vs. low extraversion and agreeableness. These were chosen for their complementary linguistic presentations and because previous work suggests users respond to distinct agent personalities.