Note

Based on the beneficiaries section and the literature review, the questionnaire needs to address the following points to meet the study’s goals:

- Perception of AI intentionality and intelligence

- Influence of agent adaptability on user engagement

- Influence of AI embodiment on users’ perception of reliability and trustworthiness

- Emotional connections with AI agents

- Trust in AI based on anthropomorphic design

- Influence on user decision-making and behaviour

- Perceived authenticity vs. manipulation risk

- User comfort with different AI embodiments

- Emotional expressiveness vs. perceived manipulation

- Preference for customisable vs. static AI designs

I conducted initial research into validated questionnaires that I could use in my study.

The questionnaires below are taken from the study available at https://arxiv.org/html/2403.00582v1.

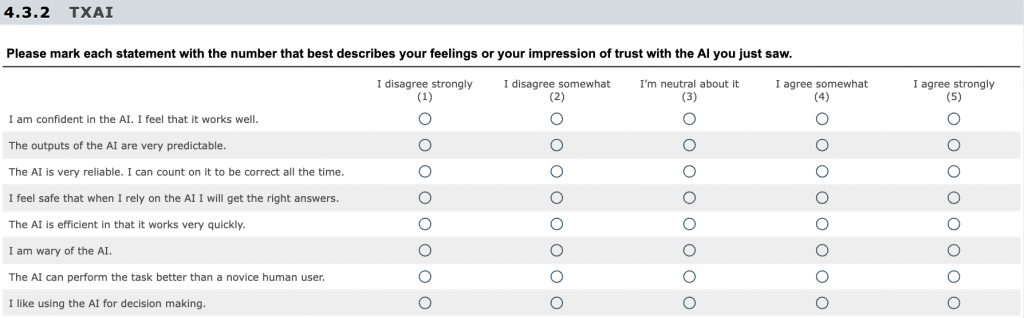

1. Trust Scale for the AI Context (TAI):

This questionnaire consists of eight items, one of which is negatively formulated, all presumably capturing trust.

Researchers and practitioners can use the TAI to assess trust in AI as a unidimensional construct, ranging from low to high trust along a continuous scale. To ensure reliability and validity, the questionnaire should closely match the version validated in that study, including the exact item wording shown in the table above (image 1) and a five-point Likert-type response scale with the corresponding options.

I won’t use this questionnaire because it focuses primarily on the technical reliability and efficiency of the AI, emphasising task-level performance and accuracy. It does not cover the emotional, psychological, and anthropomorphic aspects of AI agents, which are the focus of my research.

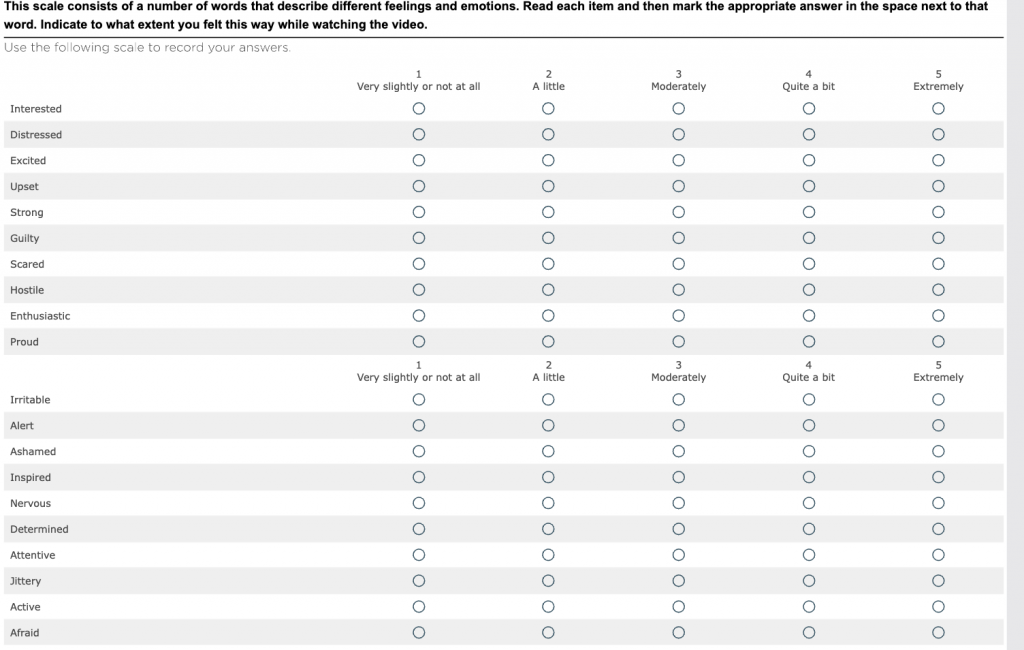

2. Positive and Negative Affect Schedule (PANAS):

The PANAS assesses individuals’ current emotional state, mood, or general emotional tendencies. In the study, it was used to measure the positive and negative affect participants experienced while observing the AI interaction.

It is a validated scale that has been used across many different studies.

I won’t use the PANAS in my study because, although it includes some relevant items such as “interested,” “excited,” and “alert,” it primarily measures general emotional states and does not capture the specific nuances of user-AI interaction, such as emotional connections, trust, and comfort with different embodiments.

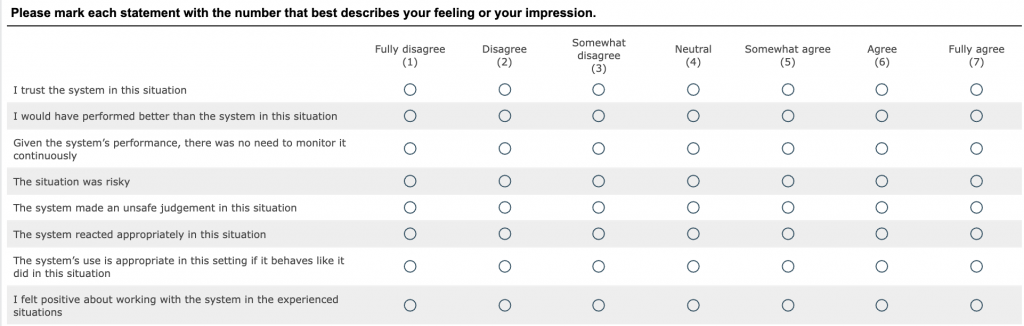

3. Situational Trust Scale (STS):

The STS assesses situational trust in AI systems in general.

This questionnaire focuses on assessing user trust and perceived system reliability in task-oriented contexts. It emphasises performance, safety, and appropriateness in specific scenarios, which does not fully align with my study’s focus on the anthropomorphic influences of AI avatars in interactive, personalised settings.